AI bots are dramatically failing to reflect diversity in contemporary British society.

After my experience trying to create realistic images of nurses for a commercial project, I have delved deeper into bias in generative AI, and found it failing.

Amazingly, for a technology developed in the 2020s, it is totally adrift of modern social realities.

AI thinks lawyers and doctors are men. In fact, most lawyers are women, and the split among doctors is fairly even.

And given that most women don’t even enter the law until they are in their thirties, the “average” female lawyer is unlikely to look like this.

In fact, two leading AI bots – Midjourney and Copilot – both thought lawyers are men in book-lined 20th-century boardrooms. Only Copilot included a woman and, predictably given my experience with nurses, it was the attractive young woman pictured here.

Neither of the two bots included any ethnic minority lawyers, even though they constitute 19% of the workforce.[1]

These lazy stereotypes recur across professions, raising worrying questions about how AIs are trained and the outmoded sexist and racist images they create.

My Study

In April 2024 I asked Midjourney and Copilot to create “A photorealistic image of an average British ….. photographed with a Sony A7Siii camera and an 80mm lens at f2.4.”

I asked them for lawyers, doctors, nurses, artists, graphic designers, police officers, vets, care home workers and firefighters.

The Results

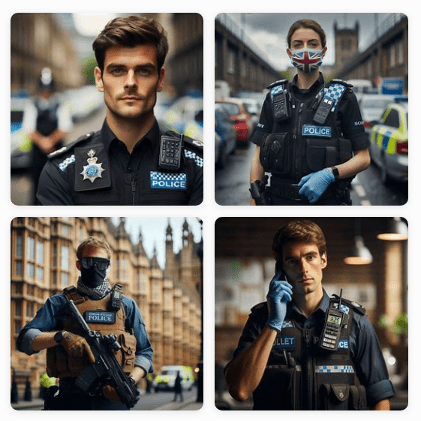

The first thing to note is that Copilot in particular couldn’t get its head around the idea of “British”.

It seemed to think that, for some professions at least, being British meant being draped in Union Jacks.

Neither could it understand that a graphic designer (the example pictured here) might be a woman. Around 60% of the workforce is male and 40% female.[2]

On graphic designers, Midjourney was better on “British” but just as bad on gender. Again, there is no room in AI for BAME graphic designers.

It was pretty much the same with vets (below). In the UK today, 58% of vets are women, but the bots just don’t reflect that. Only 3.5% of British vets are BAME, so the bots sadly do get it right on ethnicity.[3] It’s pretty safe to say that no vets are dogs, but these bots seem to think a vet is more likely to be a dog than to be female or black.

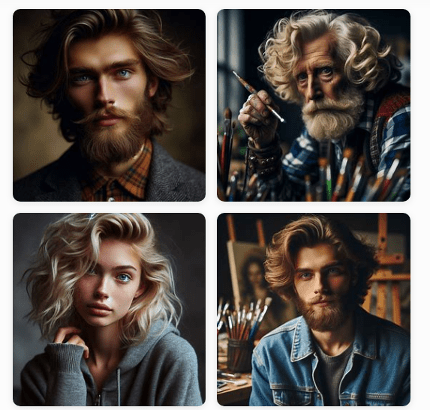

Artists in the UK today are 51% female, though galleries are more likely to show work by male artists.[4] In this category, women are finally over-represented by one of the bots (Midjourney).

The notoriously unequal fire service was represented as all-male by both bots, which perhaps fairly reflects the fact that only 8% of firefighters are women.[5] The bots have not been fooled by the pictures fire services publish to encourage diverse recruits, which portray the services as more equal than they really are.[6]

The police are a different story. While 33% of police officers are women, just one of the eight images produced by the two bots was female (and Copilot put a Union Jack face mask on her). Midjourney almost completely failed to represent a British police uniform. Both failed to reflect ethnic diversity, although with only 8.3% of British police officers coming from ethnically diverse backgrounds, they perhaps have a point.[7]

This lazy stereotyping cuts both ways, of course. Eighteen percent of adult social care workers are men, but neither AI gets that balance right.[8] Midjourney saw them all as women near retirement, while Copilot thought they were mostly men.

More alarmingly, in a very diverse occupation, with 23% of adult care workers coming from minority ethnic backgrounds, both bots created images that were 100% white.

The age profile is also interesting. The average British care worker is 44, which doesn’t seem to be reflected in the pictures, particularly Midjourney’s.

Conclusion

As media creators increasingly turn to AI as a low-cost way of producing images and videos, we should be concerned that these tools produce images that fail to reflect the real world.

I have concentrated on gender bias here, and it is enormous. But we should be equally concerned about the failure of AI to reflect ethnic diversity. Across all the professions I tested, none of the pictures showed people of African or Asian heritage: an almost incredible failure to reflect the UK of 2024.

Another worry is the Americanisation of our images.

Both Copilot and Midjourney, in different ways, failed to grasp what “British” meant. Images of the traditional British police uniform are not hard to find online, yet three out of four Midjourney images look like American cops. Copilot thought that, in many situations, being British meant covering yourself in Union Jacks.

This shows incredible cultural insensitivity, and it is a problem that is not going away any time soon.

According to the Washington Post, the training data used by these predominantly US companies makes their bots reflect “outdated western stereotypes”.[9]

The “first language of AI” is English, with bots like ChatGPT trained primarily on English and sometimes Chinese text.[10] That leads me to suspect that, because of its language, the UK is better represented by AI bots than many other cultures.

According to Belen Agullo Garcia from Deluxe Media, speaking at the Media Production and Technology show in London in May 2024, languages like Hindi – spoken by hundreds of millions in India – are barely represented at all.

If AI cannot cope with an English-speaking country like the UK, how will it deal with India? What would a similar test look like if it were carried out there, where little locally generated training data exists?

It could be argued that the images generated for my study are US-biased in other ways. Several of the professions I looked at are less diverse in the US than in the UK. Among lawyers, for example, only 37% are women in the US, compared to a majority here. The images are still inaccurate, even for the US, but arguably less so.[11]

The bots seem to be assuming that all the world looks like America.

[1] https://www.sra.org.uk/sra/equality-diversity/diversity-profession/diverse-legal-profession/

[2] https://grin.uk.com/grinsight/the-role-of-women-in-the-design-industry/#:~:text=A%20recent%20UK%20study%20showed,women%20(Gitnux%2C%202023).

[3] https://www.rcvs.org.uk/news-and-views/publications/the-2019-survey-of-the-veterinary-profession/

[4] https://www.tate.org.uk/art/women-in-art#:~:text=There’s%20a%20huge%20gap%20that%20can%20be%20filled.&text=According%20to%20the%20National%20Museum,tell%20a%20less%20optimistic%20story.

[5] https://www.gov.uk/government/statistics/fire-and-rescue-workforce-and-pensions-statistics-england-april-2021-to-march-2022/fire-and-rescue-workforce-and-pensions-statistics-england-april-2021-to-march-2022#:~:text=2.1%20Gender&text=The%20number%20and%20proportion%20of,cent%20(2%2C862)%20in%202022.

[6] See, for example, the pictures at https://www.southwales-fire.gov.uk/, https://www.manchesterfire.gov.uk/ or https://www.wmfs.net/ (correct at 29 May 2024)

[7] https://www.statista.com/statistics/378147/ethnic-minorities-in-the-police-force-of-england-and-wales-uk/#:~:text=In%202023%2C%20approximately%208.4%20percent,just%203.5%20percent%20in%202005.

[8] https://www.skillsforcare.org.uk/Adult-Social-Care-Workforce-Data/Workforce-intelligence/documents/State-of-the-adult-social-care-sector/The-state-of-the-adult-social-care-sector-and-workforce-2022.pdf

[9] https://www.washingtonpost.com/technology/interactive/2023/ai-generated-images-bias-racism-sexism-stereotypes/

[10] https://www.axios.com/2023/09/08/ai-language-gap-chatgpt

[11] https://www.abalegalprofile.com/demographics.html#:~:text=The%20gender%20numbers%20have%20changed,2000%20and%2039%25%20in%202023.