Nurses are sexy, doctors are male, and women are young and beautiful. Welcome to the world of AI content generation in 2024.

It’s not exactly breaking news that AI can reinforce prejudices already out there in society. What surprised me was how unresponsive it is when you attempt to get it out of these bad habits.

Like a caricature 1950s misogynist, you really have to give it a slap and tell it that, yes, that bloke is a nurse and, yes, the doctor is a woman.

Getting Started

I’ve been experimenting with AI image generation on Midjourney – a leading AI bot – and finally got the opportunity to use it on a project.

For the uninitiated, Midjourney is currently one of the world’s leading stills creation AIs. If you know what you are doing, you can create stills like this – a hugely controversial photorealistic image of a Palestinian boy sitting among the rubble of Gaza. The image is a complete fantasy.

My experiments in AI up until this point had been attempting to make video out of AI generated stills. I’d come up with this.

She looked more supermodel than camera operator – but I didn’t ask for this. The prompt which I fed into Midjourney for the initial image – with diversity in mind – was: “Photorealistic image of young biracial female camera operator focusing a Sony PXW-FX9 camera which is standing on a tripod on a shingle beach. The shot is close-up on her face, the lens, and the viewfinder. She is wearing a warm black jacket and scarf”.

Pleased with the results, and failing to realise the significance of the picture, I went into action on a real project.

I was given a video to edit which had not been professionally filmed and there was no usable b-roll. Obviously, I didn’t want to enter the world of deep fakes, but some drawings or illustrations might have been nice to give the film some life (and to cover much needed edits!).

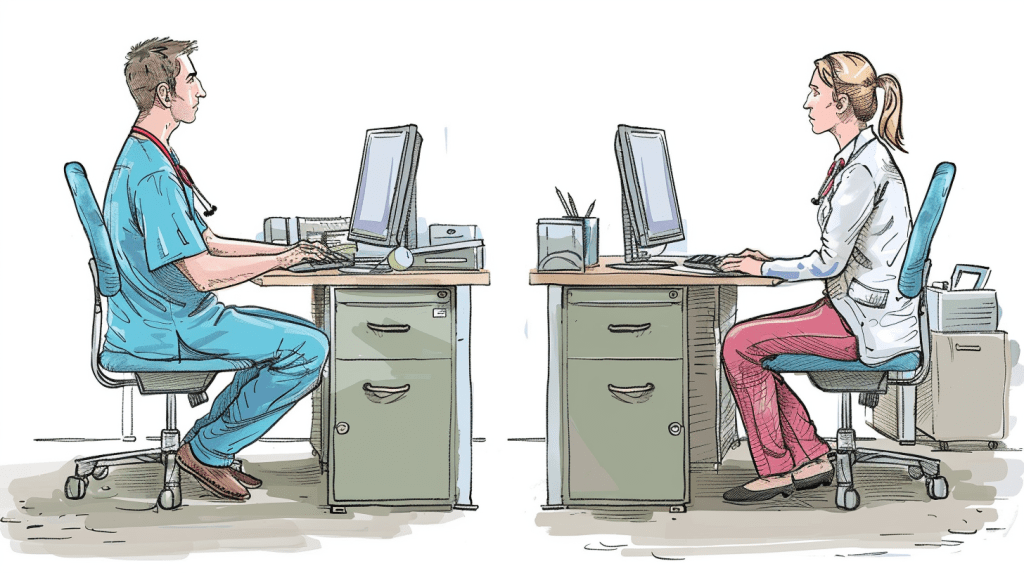

I needed a picture to go with an anecdote about two health workers who took weeks to refer patients between each other – even though they shared an office.

So, I asked Midjourney for the following: “Line cartoon of a nurse in uniform and a woman in smart casual clothes sitting the same office on their computers with their backs to each other and not talking to each other”. (The grammatical error was in the original prompt – I have left such errors in throughout)

I was initially pleased with the results:

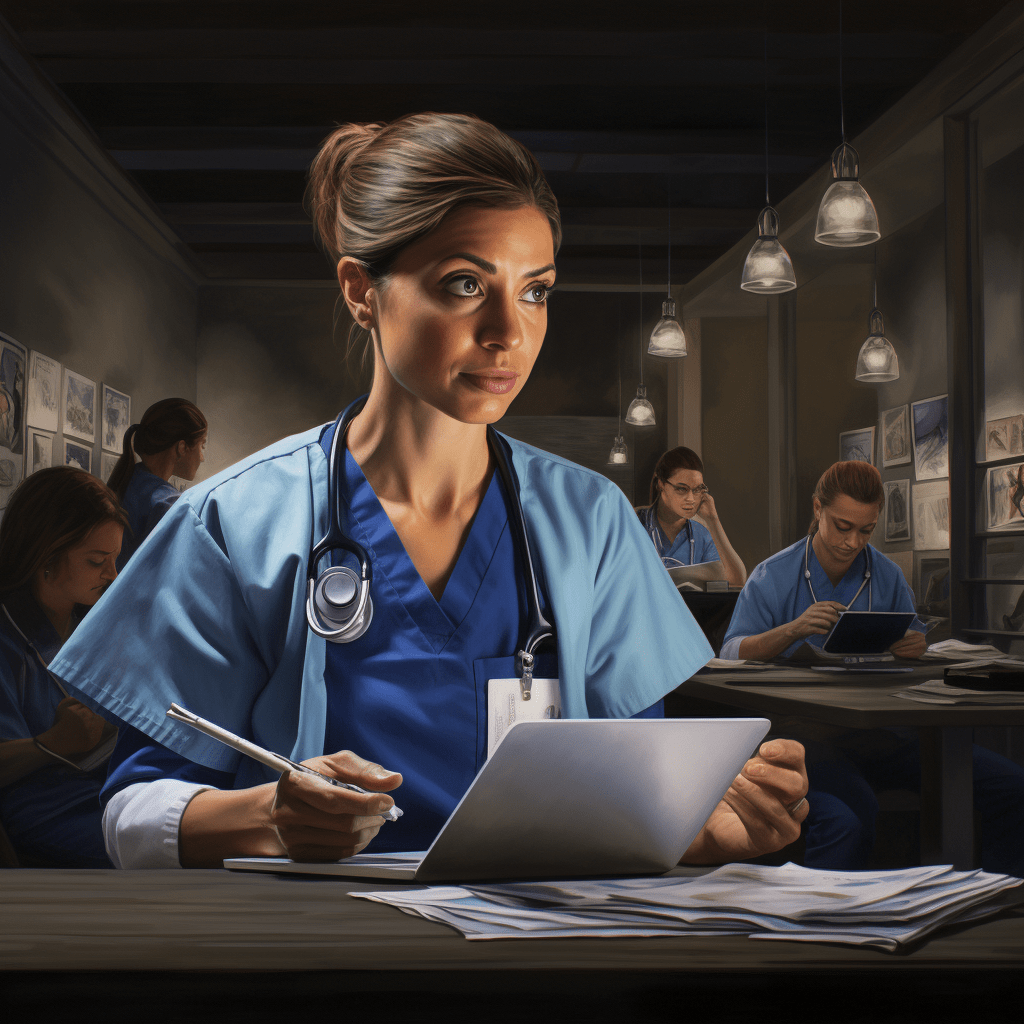

I pressed on and created an image for the start of the video to make it a bit more exciting. (The interviews were not well shot).

The prompt this time was: “Photorealistic image of female medical doctor in online meeting with other medical professionals.”

I did think this time that perhaps the doctor was a bit glamorous – but, hell, let’s see what the client thinks.

Of course, the client didn’t like them. She told me they reflected the “male gaze”. Why, I was asked, were the two women in the office so very young and very thin?

Well, as you can see from the prompts, that wasn’t what I asked for. But it does seem that asking for anything female (which I did to reflect diversity in the video) tends to create glamorous women.

To get a reality check here, statistics show that around a quarter of nurses in the UK are obese[1], and more than half are aged 35-54, with the average nurse being in their mid-40s.[2]

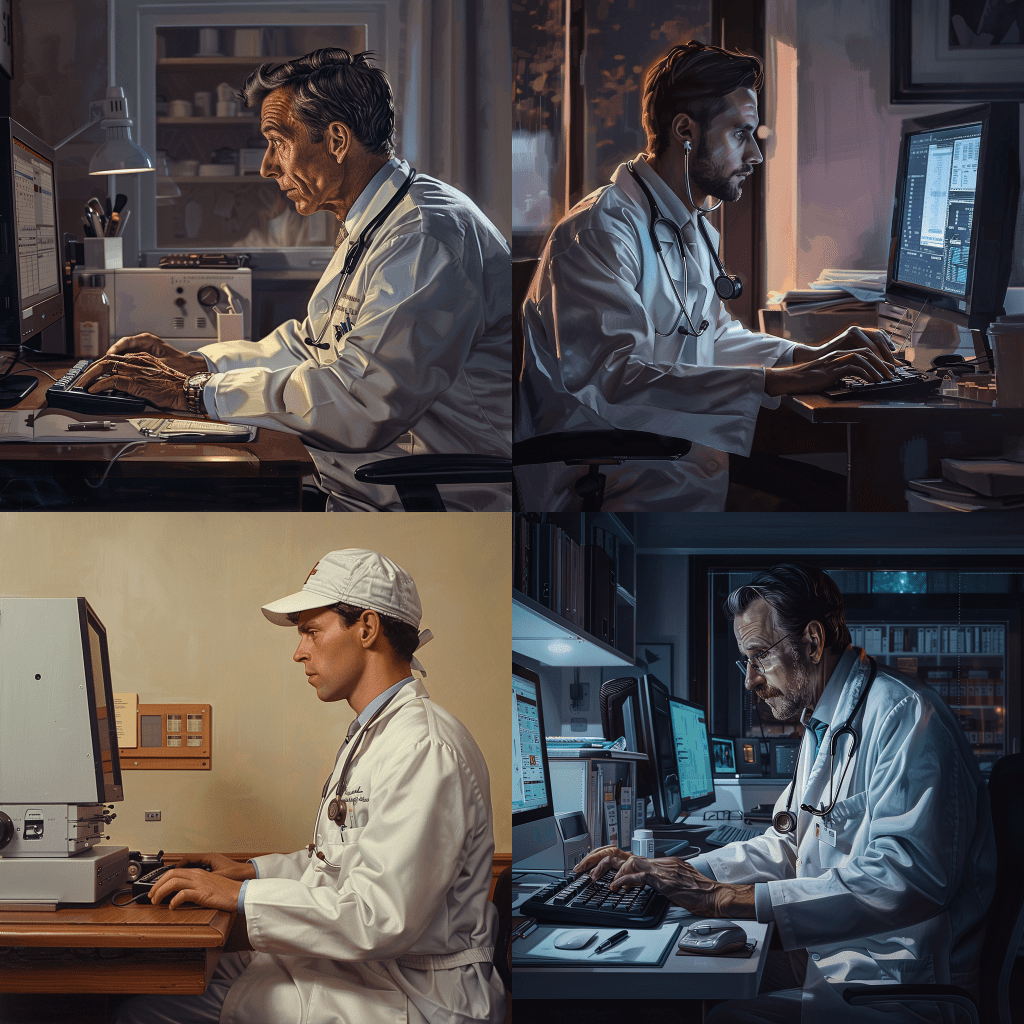

I found I did need to ask for diversity, as Midjourney is not good at creating it by itself. When, as a test, I asked Midjourney for: “A photorealistic picture of a doctor typing at a computer”, it created the following images. (Midjourney gives you four options which you can then manipulate further.)

Note that the doctors are not only male – but they are also not young, and to my eyes, not glamorous. They are also white.

Just to test again, I ran two requests through Midjourney. The first was: “A photorealistic image of a man standing on a street with shops in the background. This is a close-up of the man’s head and shoulders.” This is the result:

The second request asked the same prompt, changing “man” to “woman”: “A photorealistic image of a woman standing on a street with shops in the background. This is a close-up of the woman’s head and shoulders”.

I think the results speak for themselves. Midjourney interprets women, but not men, as young and glamorous.

So, getting back to my project, I needed to fix this. I wanted women. But women who look like someone you’d actually meet in the real world. They couldn’t, after the feedback I’d had, be too thin.

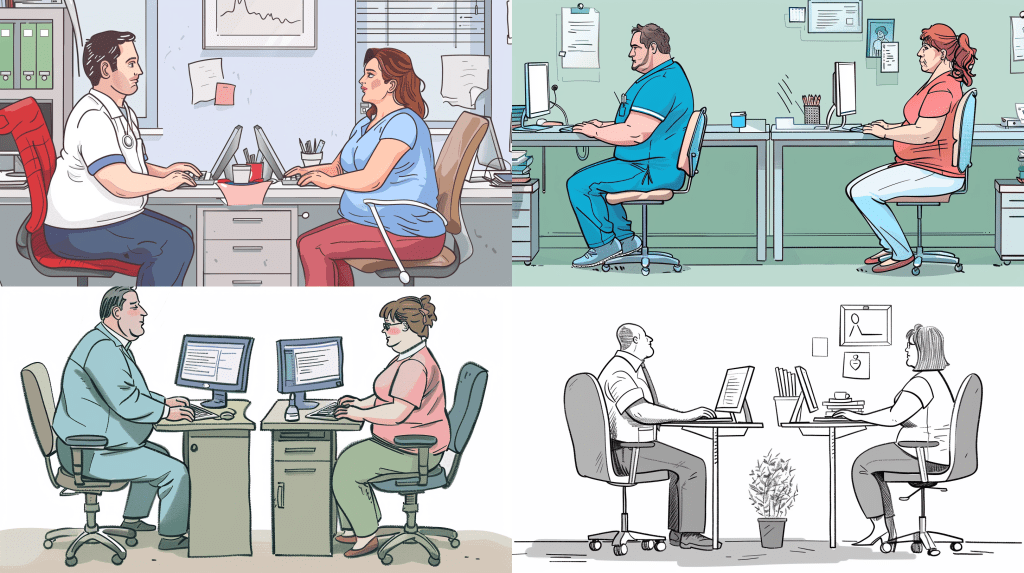

So, I tweaked my question to ask for: “Line cartoon of a middle-aged male nurse in uniform and a slightly overweight woman in her thirties in smart casual clothes sitting the same office on their computers with their backs to each other and not talking to each other”.

I got this, another extreme:

Eventually, I hit on a prompt that seemed to work a bit better: “Line cartoon of a middle-aged male nurse in uniform and a woman in her thirties in smart casual clothes sitting the same office on their computers with their backs to each other and not talking to each other”.

I’m not sure why it never gave me an option for people sitting back-to-back – but I’m not hugely experienced in designing prompts. More importantly, it shouldn’t be that hard to come up with realistic looking people.

As I carried on through the video there was a section I wanted to cover where nurses were struggling to get through on the phone to other parts of the care system. Midjourney seemed to have strong views on what nurses should look like.

The prompt (created in an attempt to avoid previous pitfalls and move away from the image of a glamorous young nurse) read: “Line cartoon of frustrated female nurse in her 40s on the phone waiting for an answer”. This was the result:

If we try for something a bit older still, Midjourney changes gear. It knows what old women look like. When I tried: “Line cartoon of an older female nurse talking on the telephone looking unhappy and frustrated”, I got this:

I tried to be explicit about what I wanted. “Line cartoon of a female nurse with average looks wearing no makeup in her 40s hanging on the telephone waiting for an answer.”

It seems that in the world of this leading generator of AI images, nurses all look like models unless they are “older”, in which case they are very old indeed.

Why Could This Be?

AIs are trained on what is called training data. Something like Midjourney will look at millions of pictures so it knows what things look like.

The companies behind these bots use web crawlers which harvest vast quantities of data from the open internet – often without the permission of the owners of the images. The AIs may then regurgitate the biases in this data.[3]

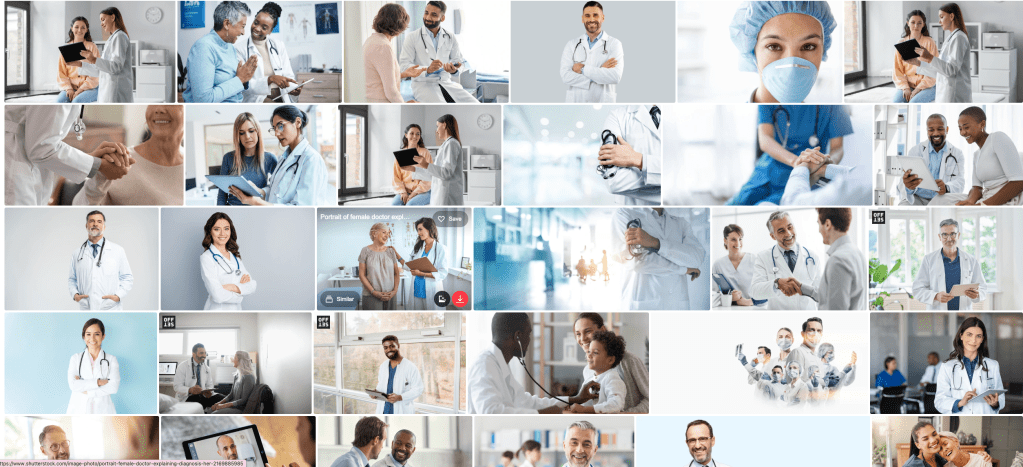

Picture libraries like Getty Images, Shutterstock and others must be a goldmine for AIs. They not only have millions of good clear images, but they also have detailed captions. If you want to know what a nurse looks like – it might be a good place to start. (Getty Images is just one organisation that is suing AI creators for unauthorised use of its millions of images.)[4]

But of course, there is a catch. And it’s one I have some experience of.

I contribute video to some of these sites, including Shutterstock. I get regular (very small) payments when my videos are used to train an AI. So, everything is above board, and I get compensated, which is good.

But the way to make money on sites like these is to produce images for “commercial usage”. These are pictures which can be used for anything – including advertising. The picture I take of a “nurse” could be used to promote healthy lifestyles, a hospital care plan or jobs in nursing. It’s fully licensed for almost anything (so long as it’s not obscene or abusive).

But there is the catch. If you are going to sign away rights to your image like that, you will normally want to be paid. Most of the millions of pictures and videos on these sites are posed by people who are paid to act as doctors, nurses or whatever.

So, the reason that nurses on these libraries look like models is because they ARE models. Very few are likely to be actual nurses.

I presume the photographers chose attractive younger models to pose as nurses because they believe they will get more sales … but that is another story.

These pictures, with their inbuilt biases, are not only found on the picture library sites themselves. They are propagated throughout the world on news websites and blogs, so the web crawlers will find the same biased images again and again.

If we search for “nurse” on Shutterstock, this is a screengrab of page 1.

Outside of group shots, there are only two male nurses. The women are predominantly young and slim. The beautiful nurse therefore becomes a self-fulfilling prophecy which is hard-wired into AIs like Midjourney.[5]

For balance, I captured Shutterstock’s first 30 images when I searched for “Doctor”.

Of these, 12 were female and one was a group shot, meaning there were 17 pictures of men. Of those, four were clearly “older” men with grey beards. None of the women appeared to me to be beyond her mid-30s, though that is obviously a subjective opinion.

It is easy to see how factors like these can lead AIs to misrepresent the world. In the UK in 2022 nearly half (47%) of doctors were women – most aged between 30 and 49.[6] The images may be more representative of the USA, where just 37% of doctors were women in 2021.[7] The world according to AI is not only more sexist, it’s possibly more American too.
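For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The counts and the two national percentages come straight from the paragraphs above; the variable and function names are my own, purely for illustration, and a sample of 30 images is far too small to prove anything on its own.

```python
# Counts from the first 30 Shutterstock results for "Doctor", as described above.
TOTAL_IMAGES = 30
FEMALE_IMAGES = 12
GROUP_SHOTS = 1
MALE_IMAGES = TOTAL_IMAGES - FEMALE_IMAGES - GROUP_SHOTS  # 17

# Real-world figures quoted in the article (share of doctors who are women).
UK_FEMALE_DOCTORS_PCT = 47.0   # UK, 2022
US_FEMALE_DOCTORS_PCT = 37.0   # USA, 2021


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Share of women across all 30 images, and across single-subject images only
# (hypothetical refinement: the group shot is left out of the denominator).
female_share_all = share(FEMALE_IMAGES, TOTAL_IMAGES)
female_share_single = share(FEMALE_IMAGES, FEMALE_IMAGES + MALE_IMAGES)

# How far the stock-library sample sits from each national figure.
gap_to_uk = round(female_share_all - UK_FEMALE_DOCTORS_PCT, 1)
gap_to_us = round(female_share_all - US_FEMALE_DOCTORS_PCT, 1)

if __name__ == "__main__":
    print(f"Men pictured: {MALE_IMAGES}")
    print(f"Women, all images: {female_share_all}%")
    print(f"Women, single-subject images: {female_share_single}%")
    print(f"Gap to UK figure: {gap_to_uk} points")
    print(f"Gap to US figure: {gap_to_us} points")
```

On these numbers the sample is 40% female, which sits seven points below the UK figure and three points above the US one.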

Conclusion

It’s not new to say that real world biases are baked into AIs, and often amplified by them. What surprised me was that it was so hard to write prompts which got rid of these biases. I’m no expert in prompt-writing, but when you specifically ask for things that should be guiding the AI to create more balanced images, it really struggles. It just can’t get the concept of “sexy nurses” out of its head.

The web crawlers which feed AIs have been criticised for being indiscriminate in the way they gather data. Could they be looking at misogynist, homophobic, or racist websites, for example? In the secretive world of AI it is difficult to know.

But it seems to me that they don’t need to look at intentionally biased sites to get biased results.

If they look at major, mainstream legitimate sites, they find the commercially available imagery of women is of overwhelmingly young (or younger looking) women. It’s not surprising the AIs struggle to imagine the real world of diversity.

We should also remember that AIs are still a blunt instrument. The companies behind them are aware of their biases, and Google in particular has tried to make its Gemini AI more diverse. The results were not encouraging. While we might welcome more diverse pictures of nurses, the images it created of World War II German soldiers were pretty inappropriate.[8]

1. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8708316/#:~:text=Studies%20have%20shown%20that%20around,(23.5%25)%20%5B7%5D.

2. See for example: https://www.rcn.org.uk/-/media/Royal-College-Of-Nursing/Documents/Publications/2023/September/011-188.pdf

   Or this: https://researchbriefings.files.parliament.uk/documents/CBP-9731/CBP-9731.pdf

   The average age of a “care sector worker” is just over 43: https://ww.nurses.co.uk/blog/stats-and-facts-uk-nursing-social-care-and-healthcare/

3. https://www.scientificamerican.com/article/your-personal-information-is-probably-being-used-to-train-generative-ai-models/

4. “Getty said its pictures are particularly valuable for AI training because of their image quality, variety of subject matter and detailed metadata.” https://www.reuters.com/legal/getty-images-lawsuit-says-stability-ai-misused-photos-train-ai-2023-02-06/

5. Getty, widely used by news organisations like the BBC, fares no better. The first page of images featured 32 images of “nurses”. Outside of group shots, there were only two men. To my eyes, none of the women seemed to be beyond their early 30s. They were mostly slim, and none were overweight. All the screenshots and statistics used here were from April 4 2024.

6. https://www.medicalwomensfederation.org.uk/our-work/facts-figures#:~:text=47%20per%20cent%20of%20doctors,41%20per%20cent%20in%202009.

7. https://www.aamc.org/data-reports/workforce/data/active-physicians-sex-specialty-2021

8. https://www.theguardian.com/technology/2024/feb/22/google-pauses-ai-generated-images-of-people-after-ethnicity-criticism
